A neighbor of ours does some housework for us twice a month. We pay her the going rate, and as far as I know we’ve never asked her to do anything surprising or annoyingly beyond what we’d agreed to: some vacuuming, counter-washing and tidying up, that sort of thing.

Our relations are friendly. Yet I never talk about her. When I discuss my life, my home, our family’s ups and downs, this woman — who spends much more time in the place than most of our friends — never comes up. I am not giving her name here because I don’t have her permission to put her in pixels. Well, that’s part of it, and it applies to her first name. I am not revealing her last name because I don’t know it.

Now, it may well be that this intimate yet invisible relationship strikes her, as it does us, as a fair and reasonable trade, freely entered into. Money for work, the basic capitalist deal. But still, there is a fundamental inequality to our relations. She is doing work we don’t want to do, freeing us up to do things that we prefer: things that are more fun, or that earn some money, or maybe both. But we never switch roles. We’re never over at her house, filling her dishwasher.

And so I have to wonder whether we aren’t, in some sense, taking advantage of an unearned privilege. Our family has more money and more education and a language advantage. Would she clean our house if circumstances put her in a position to make money doing something else? Maybe she would. Maybe she wouldn’t. It’s not an answerable question. Circumstances are what they are.

Thinking of this smiling, friendly woman, whom I like but can’t call a friend, I think of all the unmentioned people, almost all of them women, whom I have not discussed or remarked on as I made my way through middle-class life in these past few decades. There was a part-time nanny. People who came to clean. In a less intimate circle, more men, but again, people whom we would engage to do a job and then ignore. They were in my home but not of it. And, fine, very likely most did not want to be. But the fundamental inequality here (people doing work I chose not to do, doing work for me that they could otherwise have been doing for their own families, their own children) seldom gave me pause.

I grew up without a lot of money (no one working for us) and so I only learned this culture of invisible helpers in my 20s. You have something draining, repetitive, hard, emotionally heavy (like tending to a small child or a sick old person)? Well, you find someone willing to do the work, and hire them. And then don’t talk about them. Or talk about them like a resource. Years ago, in Tennessee, I met the woman who had helped to raise my then-wife. The former nanny/housekeeper was an African-American lady of somber mien and few words. She’d been ill, my in-laws told a neighbor. “Ah, yes,” he drawled, “the same thing happened to ours.”

Occasionally, our caretakers, our burden-bearers — I almost wrote “hidden,” but they aren’t hidden, they are right there, we just don’t see them the way we see our children or our neighbors—do come up in conversation with people we treat as peers. Usually, it’s because something has gone wrong (“the nanny quit with no notice!”) or because we want to follow convention and we aren’t sure what it demands (“there’s a blizzard and she can’t get here, do I still have to pay her?”).

Now and then the subject is praise for their services. (Like the time I heard some neighborhood fathers get into how pleasant it was to come home because their servants did such a good job putting the house in order — “but when you come home early, wow! it’s chaos, am I right?”)

As a general rule, though, we keep these people invisible. And I find I think very little about how I behave with them. So, for example, I try to have dishes out of the sink and toys put away when our neighbor comes to clean, because (in theory) it’s more efficient for her to do the bigger stuff we never get to, and because (in reality) I feel like there are intimate, grinding daily life things we should do for ourselves. However, if “things have gotten out of hand” or “I just don’t have time” then I leave her with the mess. Because … well, I can. She won’t complain. No one else will either.

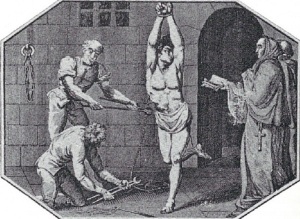

These thoughts have been prompted by this amazing piece in The Atlantic, “My Family’s Slave,” by the late Alex Tizon, a Pulitzer Prize-winning reporter who, in his 50s, decided to come to terms with the fact that the woman who raised him was, effectively, enslaved. In the Philippines, Eudocia Tomas Pulido had been taken into the family by his grandfather, tasked with raising the author’s mother. She was then brought to America and forced to work for the family for generations. She raised Tizon and his siblings. She raised Tizon’s children. She had no life of her own. And his parents thought this was fine.

So he grew up in a house in which Pulido was imprisoned and abused, because that was how things were, because this sort of thing was done by families like his. And even though he saw the injustice early in life, his loyalty to his family, especially his mother, held him in check until, in middle age, he finally tried to make some amends.

It’s possible to read this piece as the exotic and strange story of an immigrant family. And it’s possible to read it as a metaphor for American capitalism or for the position of women in patriarchy. It is possible to condemn Tizon for not doing more, in life and in print, to give Pulido her stolen life back. There is much to be said about it. It is unsettling, and my own thoughts about it are in flux.

But one thing I think the essay inevitably does is prompt its readers to think about their own invisible caretakers, and the ways in which their family’s advantages shaped the relationship. A vast amount of work undergirds the lives of people who can read The Atlantic. The people doing that work (the nannies, housecleaners, handymen) are doing it because they are at some kind of disadvantage. It might be education, migration status, money, social capital, capital-capital, or some combination of those. So it’s worth asking if arrangements that seem well and good to us (because no one complains, because that’s how things are, because someone has to pick up little Jake while I am at work) aren’t at least a little exploitative. And whether that shouldn’t affect how we see and how we treat the people whom we now barely see at all.